LSTM_modelApple.fit(LSTM_Xtrain1, LSTM_ytrain1, batch_size=32, epochs=10)
LSTM_modelGM.fit(LSTM_Xtrain2, LSTM_ytrain2, batch_size=32, epochs=10)
LSTM_modelApple.evaluate(LSTM_Xtest1, LSTM_ytest1)
LSTM_modelApple.evaluate(LSTM_Xtest2, LSTM_ytest2)

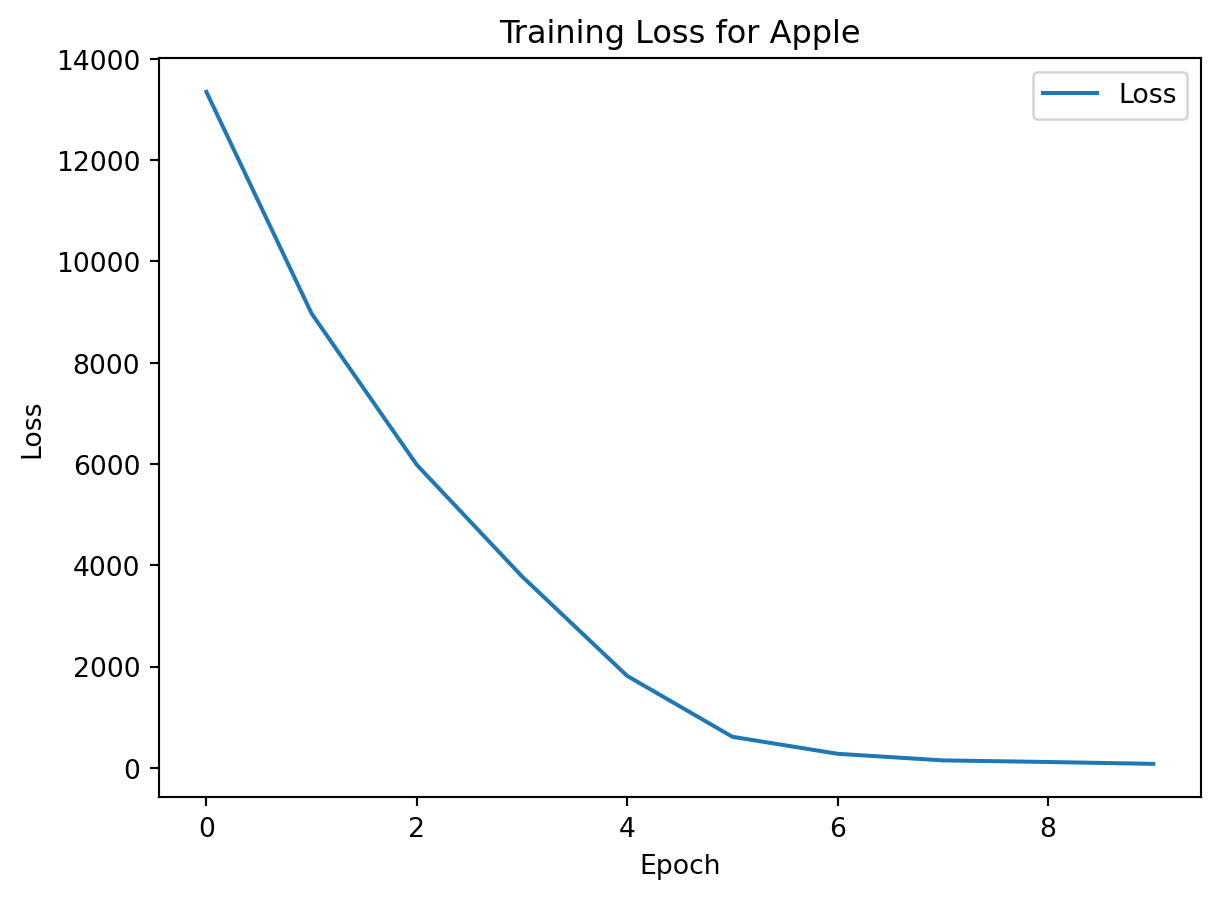

32/32 [==============================] - 9s 17ms/step - loss: 13349.0996

32/32 [==============================] - 1s 19ms/step - loss: 8971.8701

32/32 [==============================] - 1s 19ms/step - loss: 5983.0947

32/32 [==============================] - 1s 16ms/step - loss: 3779.9963

32/32 [==============================] - 1s 17ms/step - loss: 1814.1063

32/32 [==============================] - 1s 17ms/step - loss: 612.1979

32/32 [==============================] - 1s 21ms/step - loss: 276.3178

32/32 [==============================] - 1s 20ms/step - loss: 146.3327

32/32 [==============================] - 1s 18ms/step - loss: 114.4378

32/32 [==============================] - 1s 17ms/step - loss: 77.5673

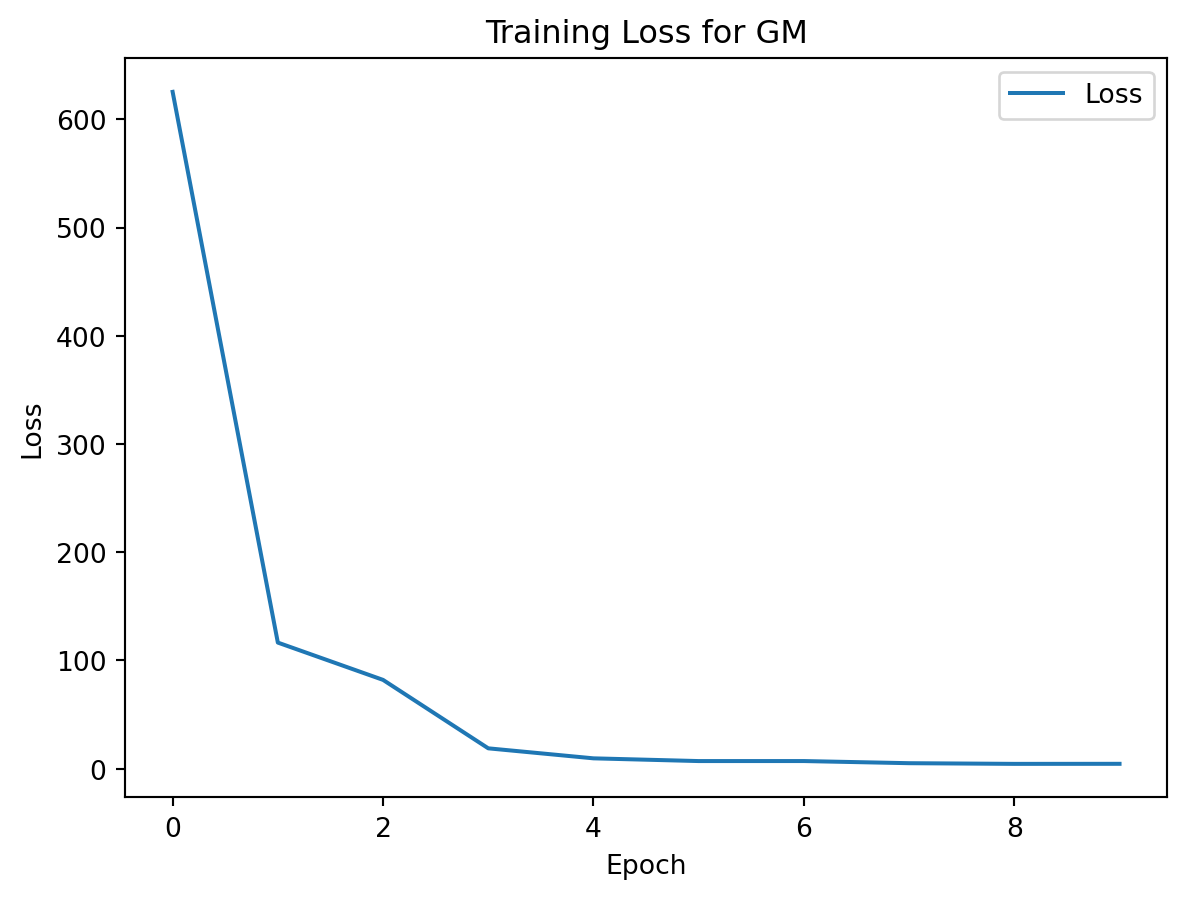

32/32 [==============================] - 9s 18ms/step - loss: 625.3185

32/32 [==============================] - 1s 18ms/step - loss: 116.4834

32/32 [==============================] - 1s 27ms/step - loss: 82.0155

32/32 [==============================] - 1s 18ms/step - loss: 18.7865

32/32 [==============================] - 1s 20ms/step - loss: 9.5347

32/32 [==============================] - 1s 18ms/step - loss: 7.0184

32/32 [==============================] - 1s 17ms/step - loss: 7.0276

32/32 [==============================] - 1s 17ms/step - loss: 5.0499

32/32 [==============================] - 1s 17ms/step - loss: 4.4300

32/32 [==============================] - 1s 17ms/step - loss: 4.4796

8/8 [==============================] - 3s 10ms/step - loss: 138.4048

8/8 [==============================] - 0s 8ms/step - loss: 22.5986
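
Raw Keras progress output like this mixes intermediate bar updates with one completed summary line per epoch or evaluation. As a minimal sketch, the completed-step losses could be pulled out of captured log text with a regular expression. The helper name `final_losses`, the `SUMMARY` pattern, and the `sample` string are hypothetical and not part of the original notebook, and the pattern assumes step times are printed in the `Ns Nms/step` form seen here.

```python
import re

# Matches a completed progress bar summary such as
# "32/32 [==============================] - 1s 17ms/step - loss: 77.5673".
# Assumption: timings appear as "<n>s <n>ms/step"; other units are not handled.
SUMMARY = re.compile(
    r"(\d+)/(\d+) \[=+\] - \d+s \d+ms/step - loss: ([0-9.]+)"
)


def final_losses(log_text):
    """Return the loss of every completed progress bar, in order of appearance."""
    losses = []
    for match in SUMMARY.finditer(log_text):
        done, total, loss = match.groups()
        # Keep only bars whose step counter reached the total.
        if done == total:
            losses.append(float(loss))
    return losses


# Hypothetical sample built from lines of the output above.
sample = (
    "31/32 [============================>.] - ETA: 0s - loss: 4.5141\n"
    "32/32 [==============================] - 1s 17ms/step - loss: 4.4796\n"
    "8/8 [==============================] - 0s 8ms/step - loss: 22.5986\n"
)
print(final_losses(sample))  # [4.4796, 22.5986]
```

Intermediate lines are skipped because their bar still contains `>` or `.` characters and they report an `ETA` instead of a step time.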